RIP Blitzscaling

The past 14 years have been an absolute windfall for venture capitalists and startups. The VC market 10x’d. GPs sprang up everywhere. Startups with questionable business models raised hilarious amounts of capital, bloating to sky-high valuations without even flirting with profitability. There has been so much capital sloshing around that startups have been selling to other startups.

The hype word everyone was flexing was “blitzscaling”: growth at any cost. Forget efficiency, forget product; spend on ads and hype instead. To them, it surely felt like a party.

“Let’s go crazy.” — Prince

We’ve certainly been partying like it’s 1999. But every party has its hangover. For me, at least.

Consider March 10, 2000. The Nasdaq peaked and then dropped nearly 80% over the following two and a half years. The hype? Pretty similar to today with AI. Lots of folks said we were in a bubble (me). But to others, the “New Economy” was going to change everything.

It didn’t.

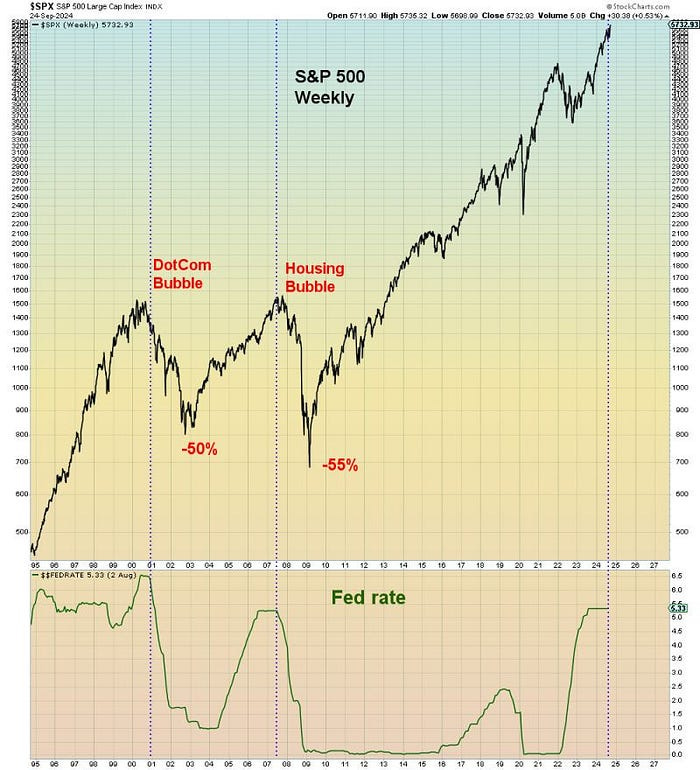

Then came the Great Financial Crisis of 2007–2008. The Fed responded with insane levels of monetary stimulus. Interest rates dropped to nearly zero, and liquidity flooded the system. Enter the VC boom era. Cheap capital, risky bets.

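Then Covid hit, and the Fed went absolutely stark raving mad. The headline M1 money supply jumped from about $4 trillion in early 2020 to over $20 trillion by late 2021, although much of that 5x reflects a May 2020 redefinition that moved savings deposits into M1. Even the broader M2 measure grew by roughly 40% over the same period, from about $15 trillion to over $21 trillion.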

Upon this further absurdity, LPs and VCs were ripping rails like Rick James. Now they’re coming down (last 16 months or so) and peeping around for the next party.

So, what’s next?

At this point, I really wouldn’t be surprised if the Fed decided they needed to create $500 trillion in stimulus. If you’re going to create wealth inequality, why stop now at the highest levels in history? REALLY go for it! The key is “greater worker insecurity” (Greenspan) which leads to wage stagnation — one thing they nailed.

And the Fed is already lowering rates. Hmmm… what happened the last two times they started lowering rates when they were over 5%?

The Rise of GenAI

Enter GenAI. This time is different — as it always is. Except it actually is — in a way.

To be clear, by GenAI, I mean LLMs, and other AI systems that use text, video, audio, and other sensory information to produce text, images, videos, code, audio, music, 3D models, and the like.

Have you ever driven by a commercial development and it’s just dirt and tractors roaming around for months on end? Pushing dirt around. What are they even doing? Get to work guys!

And then one day, you drive by and there’s a skyscraper skeleton, and you think, “Hey, they finally got some work done!”

That’s what GenAI is. The groundwork has to be laid. Once it’s up, people will wonder what hit them.

Yes, AI is a bubble. But it will change the world, eventually.

It’s a land grab. Do you remember how, in the late 1990s and early 2000s, Level 3 Communications, Global Crossing, Qwest Communications, and WorldCom (which later became infamous for its accounting scandal) laid down something like four times more fiber-optic infrastructure than was necessary? The narrative was that the internet’s rapid growth would lead to an explosion in traffic requiring that amount of bandwidth.

But the real story is none of them wanted to lose. Yet they did. Global Crossing and WorldCom went bankrupt, and even Level 3 struggled for a time, though it managed to survive.

This race resulted in an enormous surplus of “dark fiber” — fiber-optic cables that were installed but were unused for years. It wasn’t until the mid-2000s and later, with the rise of video streaming, cloud computing, and mobile data consumption, that the demand for bandwidth caught up to the infrastructure.

AI infrastructure is undergoing the same type of race. And remember, this time there’s $20 trillion floating around. It’s better to lose some of that than get left behind.

The unfortunate part is we spent almost all of the absurd 14-year stimulus on things that didn’t lay a good foundation for what’s ahead — the Metaverse, Crypto and ICOs, 3D printing, etc. We squandered it. None of these things were the sitework and grubbing and excavation needed for an AI future. Well, blockchain will come back around.

The VC high tide will now begin its slow retreat. That’s good because it means you can focus on building things that provide value and make money rather than focusing on not getting out-raised by some grifter.

Focus on Agentic AI

Many people who have used ChatGPT think, “Well, this is cool, but it’s not really useful for business. It’s all hype!”

Let me explain why it isn’t just hype.

ChatGPT works as a zero-shot inference system, which means it doesn’t need examples to generate responses. You ask, it answers. Simple. Yes, it has a context window, which technically lets it work in a one-shot or many-shot fashion, but it’s still a human talking to an AI.

In the future (and even in some new systems today, such as Andrew Ng’s Vision Agent), AI won’t work like this. Instead, it will be “many AI to many AI” interaction in complex systems with hundreds, millions, or billions of “AI agents” speaking to one another.

“Wait!” you say. “If AI systems return garbage much of the time how can you have millions of these things talking to each other? Won’t that just devolve into nonsense?”

Rather than bore you with abstractions, I’ll bore you with a practical example by writing an agentic workflow. Don’t worry, no technical expertise is needed (which is the point).

One thing first: In traditional software, the job of a system (or subsystem, program, method, etc.) is to return a response based on a state transition. Input “2+2,” and get “4.” It’s causal and predictable. This design enforces logical, sequential transitions within the system.

Currently, foundation models such as GPT-4 (OpenAI), Claude 2 (Anthropic), PaLM 2 (Google DeepMind), and LLaMA 2 (Meta) do not behave like typical state transitions because interpreting their output is ambiguous. So the first step is to reduce the ambiguity and add scale and redundancy to get to a reliable state transition architecture. That’s a lot of what agentic AI workflows do.
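To make “reduce the ambiguity” concrete, here is a minimal Python sketch. It assumes a hypothetical `fake_model` function standing in for a real model call, and the helper names `parse_integer` and `ask_until_valid` are made up for illustration. The idea is simply to accept a reply only when it parses into the expected form, and to ask again otherwise.

```python
import re
from typing import Callable, Optional


def parse_integer(reply: str) -> Optional[int]:
    """Accept a reply only if it contains exactly one integer."""
    numbers = re.findall(r"-?\d+", reply)
    return int(numbers[0]) if len(numbers) == 1 else None


def ask_until_valid(ask: Callable[[str], str], prompt: str, attempts: int = 3) -> int:
    """Re-ask the model until a reply parses cleanly, up to `attempts` tries."""
    for _ in range(attempts):
        value = parse_integer(ask(prompt))
        if value is not None:
            return value
    raise ValueError(f"no unambiguous answer after {attempts} attempts")


# Hypothetical stand-in for a model call: it replies with canned text.
canned_replies = iter(["Sure! Two plus two is four.", "The answer is 4."])


def fake_model(prompt: str) -> str:
    return next(canned_replies)


print(ask_until_valid(fake_model, "What is 2+2? Reply with a number only."))  # 4
```

The first canned reply is chatty and contains no digits, so it is rejected; the second parses to a single number and becomes the state the rest of the system can rely on.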

Creating The ‘Going For a Walk’ AI Agent

Is now a good time for a walk? Let’s create 3 AI agents to find out.

You can skim this part to get the idea.

User Interaction (Agent 1): This is you in this example, but in an AI system it would be an agent that calls the other two agents.

Weather Data (Agent 2): Prompt: “I am in the ______ area of (city), (state). Please check the hourly weather for the next 2 hours. Respond in this way: Hour 1 Temp: (a number), Hour 2 Temp: (a number), Hour 1 chance of precipitation: (a percentage), Hour 2 chance of precipitation: (a percentage), Hour 1 Humidity: (a number), Hour 2 Humidity: (a number)”

Result A: Here is the hourly weather forecast for Buckhead, Atlanta, GA over the next two hours: Hour 1: Temp: 71°F, Chance of precipitation: 0%, Humidity: 78%. Hour 2: Temp: 70°F, Chance of precipitation: 0%, Humidity: 80%. The weather is expected to be mostly clear with no rain in the near future.

Decision (Agent 3): Prompt: “You will decide whether it’s okay for me to go for a walk. I only walk when there’s no precipitation, when the temperature is between 40–85°F, and when the humidity is less than 88%. Here’s the weather for the next two hours: ” + (Result A above) + “Only respond with one or two words: ‘walk’ or ‘no walk.’”

Result B: Walk

See? A state transition. Easy.
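For readers who do want to see it as code, the three agents above could be wired together roughly like this. It is only a sketch: `weather_agent` returns the canned Result A instead of calling a model, and `decision_agent` applies the walking rules directly rather than prompting an LLM. All function names are hypothetical.

```python
import re


def weather_agent(area: str) -> str:
    """Agent 2 stand-in: a real agent would prompt a model with weather access."""
    return (
        "Hour 1: Temp: 71°F, Chance of precipitation: 0%, Humidity: 78%. "
        "Hour 2: Temp: 70°F, Chance of precipitation: 0%, Humidity: 80%."
    )


def parse_forecast(text: str) -> list:
    """Pull one dict per hour out of the agreed response format."""
    pattern = re.compile(
        r"Temp:\s*(-?\d+)°F,\s*Chance of precipitation:\s*(\d+)%,\s*Humidity:\s*(\d+)%"
    )
    return [
        {"temp_f": int(t), "precip_pct": int(p), "humidity_pct": int(h)}
        for t, p, h in pattern.findall(text)
    ]


def decision_agent(forecast_text: str) -> str:
    """Agent 3 stand-in: applies the walking rules and returns one of two states."""
    hours = parse_forecast(forecast_text)
    if not hours:
        return "no walk"  # unreadable forecast: fail safe
    ok = all(
        h["precip_pct"] == 0 and 40 <= h["temp_f"] <= 85 and h["humidity_pct"] < 88
        for h in hours
    )
    return "walk" if ok else "no walk"


def user_agent(area: str) -> str:
    """Agent 1: calls the other two agents and passes the result along."""
    return decision_agent(weather_agent(area))


print(user_agent("Buckhead, Atlanta, GA"))  # walk
```

Whatever happens inside each agent, the caller only ever sees “walk” or “no walk,” which is what makes the output usable as a state transition.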

Add Redundancy

To increase accuracy, we next create even more AI agents. Let’s add a CheckWeather Agent, which asks three different Weather Data Agents to check the weather. Each Weather Data Agent checks a different source: AccuWeather, a private company; NOAA, a government entity; and The Weather Company, formerly owned by IBM.

The CheckWeather Agent then sends each of these results to three different Decision Agents, each running on a different foundation model: one on GPT, one on Claude, and one on LLaMA. The CheckWeather Agent then verifies the responses and provides an answer, “walk,” which is a clear state transition. If inconsistencies are found, there’s a workflow for that too, and the final result is still ‘walk’ or ‘no walk.’
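One way the redundancy step might look in code is a simple majority vote. This sketch makes several assumptions: the three sources and three deciders are hypothetical stubs with made-up numbers (no real weather API or model is called), invalid replies are discarded, and anything short of a strict majority for “walk” falls back to “no walk.”

```python
from collections import Counter
from typing import Callable, Dict

Forecast = Dict[str, int]  # keys: temp_f, precip_pct, humidity_pct
VALID = ("walk", "no walk")


def rule_decider(f: Forecast) -> str:
    """Stand-in for a Decision Agent that follows the walking rules."""
    ok = f["precip_pct"] == 0 and 40 <= f["temp_f"] <= 85 and f["humidity_pct"] < 88
    return "walk" if ok else "no walk"


def chatty_decider(f: Forecast) -> str:
    """Stand-in for a model that ignores the requested answer format."""
    return "It looks lovely outside, enjoy!"


def check_weather(
    sources: Dict[str, Callable[[], Forecast]],
    deciders: Dict[str, Callable[[Forecast], str]],
) -> str:
    """Send every forecast to every decider, then take a majority vote."""
    votes = Counter()
    for fetch in sources.values():
        forecast = fetch()
        for decide in deciders.values():
            reply = decide(forecast).strip().lower()
            if reply in VALID:  # discard anything outside the two allowed states
                votes[reply] += 1
    total = sum(votes.values())
    return "walk" if total > 0 and votes["walk"] * 2 > total else "no walk"


# Hypothetical stubs: illustrative numbers, not real forecasts.
sources = {
    "accuweather": lambda: {"temp_f": 71, "precip_pct": 0, "humidity_pct": 78},
    "noaa": lambda: {"temp_f": 70, "precip_pct": 0, "humidity_pct": 80},
    "weather_company": lambda: {"temp_f": 72, "precip_pct": 10, "humidity_pct": 79},
}
deciders = {"gpt": rule_decider, "claude": rule_decider, "llama": chatty_decider}

print(check_weather(sources, deciders))  # walk
```

Here one source disagrees and one decider returns an unusable reply, yet four of the six valid votes say “walk,” so the workflow still produces a single clean answer.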

And so on. Pretty boring, and you can easily accomplish this with regular software, of course. But imagine an AI system with a similar agentic workflow whose job it is to read hundreds of thousands of PDF files and produce financial forecasts.

Through decomposition (creating an agentic AI workflow) combined with traditional software architecture (state transitions, lots of testing, training and retraining models, organizing and cleaning data), the overall result will be a new era of software capable of much more powerful things.

The Opportunity of a Lifetime

As we wade into the ebbing tide of capital markets, driven by comically reckless Fed and government actions and worsened by greed, we are staring down a generation-defining era of tech in GenAI.

Days like this don’t come often. You only get a few in a lifetime.

The era of blitzscaling — fueled by cheap money and endless speculative bets — is over.

We are now entering an era driven by people with different values — from the greedy to the gritty.

If that’s you, this is the best time in nearly 20 years to double down on tech without the risk of weaponized money ominously lurking in the background, ready to quash your efforts.

Get to it!
